Note: this post has been edited from the original to reflect initial feedback. Most notably, the introductory framing has been changed to focus more on the gap itself and closing it. The second change follows the comment from nemobis. The post now makes the distinction that this analysis only refers to the English Wikipedia.

Introduction

There is a large gap in the number of men and women who contribute to Wikipedia. Researchers have been studying the gender gap in participation on the website since at least 2007, when its usage exploded to tens of thousands of editors. Most of this research has been on the English-language Wikipedia, and the results have consistently shown that the ratio of male to female editors has been at least 4 to 1 since 2007. Furthermore, recent statistics suggest the gap has only increased since that time. The gender gap covers not only who edits Wikipedia, but also the number of edits men and women make, whose contributions persist in articles and whose get deleted, and how long someone remains an editor. These numerical differences have arguably contributed to a number of systemic biases in the encyclopedia, including imbalances in the topics represented in the world's encyclopedia, its unapproachable design, and an often hostile editorial culture.

In this post, I simulate the effect of different strategies that could reduce the gender gap on the English Wikipedia. First, I look at increasing retention among new and existing female editors. The results indicate that this approach is difficult, if not impossible, because of the large gender gap among first-time editors. Second, I look at whether recruiting more new female editors could close the gap by enlarging the pool of first-time female editors. The results are slightly more positive here, but even so, drastic changes would have to be made to Wikipedia as it is. Overall, the results indicate that incremental changes to these areas of English Wikipedia are woefully incapable of bringing gender parity within the next decade. At the end, I discuss some policy solutions for Wikipedia and highlight three desperately needed areas for further research that several commentators have noted.

Analytic Approach

I simulate monthly population replacement for active editors on the English-language Wikipedia over the next ten years. The general formula I use, applied separately to male and female editors, is: last month's editors * (1 - monthly retirement rate) + new editors * new editor retention rate = current month's editors. I take estimates of these parameters, especially as they differ by gender, and predict how large the gender gap will be over the next ten years if we vary editor retention or editor recruitment. First, let's start with the current numbers:
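
As a minimal sketch, the monthly update can be written as a small Python function and applied separately to male and female editors. The function and parameter names are hypothetical, chosen only for illustration.

```python
def next_month(prev_active: float, new_editors: float,
               monthly_retirement: float, new_retention: float) -> float:
    """One month of population replacement for a single group of editors."""
    # Active editors from last month who did not retire.
    survivors = prev_active * (1.0 - monthly_retirement)
    # This month's newcomers who go on to become active editors.
    retained_newcomers = new_editors * new_retention
    return survivors + retained_newcomers


# Female editors, one month ahead, using the baseline estimates below.
print(next_month(7_000, 1_200, 0.021, 0.0798))
```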

Monthly Active Editors: 35,000 (28,000 male + 7,000 female)

While 125,000 people edited the English Wikipedia last month, few are considered active editors. Active editors are typically counted as those who make five or more edits in a month. Last month (March), Wikimedia statistics counted 33,000 active editors (roughly 26% of all editors). For my projections, I round this to 35,000 since I like round numbers, and, as we'll see, it doesn't matter for the overall point. The exact split between male and female editors is unknown, but estimates of the female share range between 9 and 23 percent. The best estimate is 23%, but it dates from the height of Wikipedia's editing activity, so it likely over-estimates the prevalence of female editors, who have lower retention rates than male editors. Again, rounding to 20% makes the numbers easy to consider and adds a bit of optimism to this generally dim story. So, 20% of 35,000 is 7,000 female editors, and 35,000 - 7,000 = 28,000 male editors.
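
The arithmetic behind these starting values, as a quick check (the 20% female share is the rounded assumption described above):

```python
editors_last_month = 125_000   # everyone who edited English Wikipedia last month
active_march = 33_000          # active editors reported for March
active_rounded = 35_000        # rounded starting value for the projections
female_share = 0.20            # rounded estimate of the female share

active_fraction = active_march / editors_last_month
female_active = round(active_rounded * female_share)
male_active = active_rounded - female_active

print(f"{active_fraction:.1%} of editors were active")
print(f"{female_active:,} female and {male_active:,} male active editors")
```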

Monthly Retiring Editors: 2.1%

A study done in 2009 found that active editors on English Wikipedia remained active over the course of a year at a rate of about 75 to 80%. The current rate is likely lower, as the researchers found that more recently recruited editors have higher attrition rates than editors who joined English Wikipedia earlier. I divide the annual loss of 25% by 12 to turn it into a monthly estimate, which gives a monthly retirement rate of about 2.1% (25/12 ≈ 2.08). Another way to say this is that 97.9% of active editors are expected to be active next month.
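
Dividing by 12 is a linear approximation. The sketch below compares it with a compounding conversion; the compounding version is an alternative added only for comparison, while the projections use the linear 2.1%.

```python
annual_retention = 0.75  # share of active editors still active a year later

# Linear conversion used in the projections: spread the 25% loss evenly.
linear_rate = (1 - annual_retention) / 12

# Compounding alternative: the monthly rate r with (1 - r) ** 12 == 0.75.
compound_rate = 1 - annual_retention ** (1 / 12)

for name, rate in (("linear", linear_rate), ("compounded", compound_rate)):
    kept_after_year = (1 - rate) ** 12
    print(f"{name:>10}: {rate:.2%} per month, "
          f"{kept_after_year:.1%} still active after 12 months")
```

The compounded rate is slightly higher (about 2.4% per month), because applying the linear rate month after month leaves a bit more than 75% of editors active after a year. That still falls inside the study's 75 to 80% range.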

Monthly New Editors: 1,200 women with a 7.98% retention rate and 4,800 men with a 10.5% retention rate.

The rate of new editors actually involves two parameters: the number of new male and female editors and the rate at which new users remain on English Wikipedia. Going back to Wikimedia statistics, there were 6,600 new English users in March. I round it to 6,000. Re-using the 20% female statistic from earlier (also generally confirmed among new editors here), this gets us 1,200 new female editors per month and 4,800 new male editors.

An earlier paper found retention rates of roughly 17% and 13% for male and female editors on English Wikipedia, respectively (if I'm reading Figure 4 in the paper correctly). However, this is higher than the 10% overall rate reported by the earlier editor retention study. To translate this gender inequality into an estimate of current inequality, I kept the .13:.17 female-to-male ratio and found the male and female retention rates that produce a 10% overall average when 20% of new editors are female. Long story short, I estimate new male editors stick around at a rate of about 10.5% and new female editors at a rate of about 7.98%.
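
One way to reproduce this estimate is a small linear solve, assuming 20% of new editors are female and taking the 13:17 female-to-male ratio at face value. This is a sketch of the calculation; the variable names are illustrative.

```python
new_female_share = 0.20              # share of new editors who are female
overall_retention = 0.10             # overall new-editor retention to match
female_to_male = 0.13 / 0.17         # relative retention from the earlier paper

# Solve  s * f + (1 - s) * m = overall  subject to  f = female_to_male * m.
male_retention = overall_retention / (
    new_female_share * female_to_male + (1 - new_female_share)
)
female_retention = female_to_male * male_retention

print(f"new male retention:   {male_retention:.2%}")
print(f"new female retention: {female_retention:.2%}")
```

This gives roughly 10.5% for men and 8.0% for women, close to the rounded figures used in the projections.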

So, for male editors in a given month, the formula is: past month male editors*.979 editor retention+4,800 new males * .105 new male retention

For female editors in a given month, the formula is: past month female editors*.979 editor retention + 1,200 new females * .0798 new female retention
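
Putting the two formulas together gives a ten-year (120-month) projection. This is a minimal sketch; `Scenario` and `simulate` are hypothetical names, and the defaults are the baseline estimates above.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """Monthly parameters; the defaults are the baseline estimates above."""
    new_male: float = 4_800
    new_female: float = 1_200
    male_new_retention: float = 0.105
    female_new_retention: float = 0.0798
    monthly_retirement: float = 0.021


def simulate(s: Scenario, months: int = 120,
             male: float = 28_000, female: float = 7_000) -> list[tuple[float, float]]:
    """Project (male, female) active editors month by month."""
    history = []
    for _ in range(months):
        male = male * (1 - s.monthly_retirement) + s.new_male * s.male_new_retention
        female = female * (1 - s.monthly_retirement) + s.new_female * s.female_new_retention
        history.append((male, female))
    return history


final_male, final_female = simulate(Scenario())[-1]
print(f"after 10 years: {final_female / (final_male + final_female):.1%} female")
```

Under these baseline parameters the female share does not hold at 20% but drifts down over the decade: about 96 retained new women per month (1,200 * 7.98%) cannot replace the roughly 147 of 7,000 female editors who retire each month at 2.1%.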

Hypotheses

- Increasing the rate at which new female editors are retained will increase gender parity, bringing the gender gap in monthly active editors to parity by 2026.

- Decreasing the rate at which existing female editors retire will increase gender parity, bringing the gender gap in monthly active editors to parity by 2026.

- Increasing the number of new female editors will increase gender parity, bringing the gender gap in monthly active editors to parity by 2026.

Results

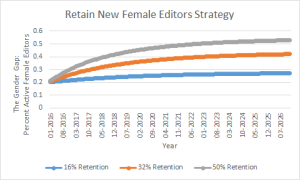

New Editor Retention: Editor retention and engagement are big issues for Wikipedia in general, and especially for Wikipedians interested in closing the gender gap. Weak editor retention has been attributed to a range of factors, including the editing culture and bureaucratization. The results here indicate that retention is not an easy solution. In the first figure, I project the percentage of monthly active editors who are female through 2026.

The blue line, representing a 16% retention rate for new female editors, shows what happens to the gender gap if we double the rate at which new female editors become monthly active editors. Doubling our effectiveness narrows the gap by 14 points, from 20% women (a 60% gap) to 27% women (a 46% gap), over the course of a decade. The orange line represents a quadrupling of our current efficacy and, in a decade, gets us to 40% female editors, a 20% gap. Finally, the grey line, representing the retention of half of all new female editors, closes the gap in a little over five years. Unfortunately, according to the editor retention study, English Wikipedia has never had a retention rate over 40%.
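
Why doubling retention only reaches about 27% follows from the update formula. Writing $F_t$ for active female editors in month $t$, $r = 0.021$ for the monthly retirement rate, $N_F$ for new female editors per month, and $\rho_F$ for their retention rate (notation introduced here for illustration), the recurrence has a closed form that converges to a steady state $F^{*}$:

$$
F_t = (1 - r)\,F_{t-1} + N_F\,\rho_F
\quad\Longrightarrow\quad
F_t = F^{*} + (F_0 - F^{*})(1 - r)^{t},
\qquad
F^{*} = \frac{N_F\,\rho_F}{r}.
$$

With the male parameters, $M^{*} = 4{,}800 \times 0.105 / 0.021 = 24{,}000$. Doubling female retention to 16% gives $F^{*} = 1{,}200 \times 0.16 / 0.021 \approx 9{,}143$, so even in the long run women would make up only about 28% of active editors. At 50% retention, $F^{*} = 1{,}200 \times 0.5 / 0.021 \approx 28{,}571$, which exceeds 24,000, so parity is eventually reached.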

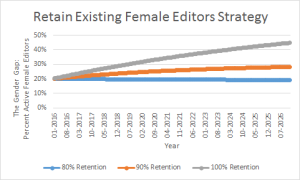

Existing Editor Retention: Currently, about 25% of active editors retire every year, or about 2.1% per month. In this simulation, we ask what happens if we increase the annual retention rate of existing female editors to 80%, 90%, or 100% while keeping the annual retention rate for male editors at 75%.

The results are less optimistic than we'd like. Even if every current and future active female editor kept editing for the next ten years, the gender gap would still be 10% (45% of editors would be female). Less extreme changes, like raising female editor retention to 80% or 90% (rates that do occur among editors who joined in the early 2000s and remain on English Wikipedia today), would do little to nothing to close the gap.

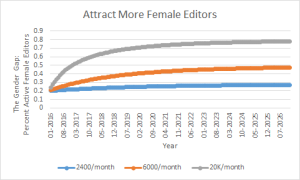

More New Female Editors: The final way to resolve the issue is by increasing the number of new female editors each month. The results here are somewhat more promising, but demonstrate the same general need for radically different practices.

The blue line represents doubling current recruitment from 1,200 new female editors per month to 2,400. In 10 years, that yields a monthly editor base that's 27% women (a 46% gap). If we quintuple the number of new female editors per month to 6,000, slightly more than the 4,800 men currently recruited, the editor base is 47% female in 10 years. Note that this means even recruiting more new women than men each month would not achieve gender parity among all active editors within 10 years, because new female editors are retained at a lower rate than new male editors. The last curve is a fantastical one that assumes Wikipedia recruited women in the same proportion it now recruits men (4,800 is 20% of 24,000; I rounded in the graph). In this radical case, parity is reached in one year.
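
A sketch for checking when, if ever, parity is reached for a given level of recruitment, keeping the baseline retention parameters (the function name is hypothetical):

```python
from typing import Optional


def months_to_parity(new_female_per_month: float,
                     max_months: int = 600) -> Optional[int]:
    """First month in which active female editors reach the male count,
    or None if that does not happen within `max_months`."""
    male, female = 28_000.0, 7_000.0
    for month in range(1, max_months + 1):
        male = male * 0.979 + 4_800 * 0.105
        female = female * 0.979 + new_female_per_month * 0.0798
        if female >= male:
            return month
    return None


for recruits in (2_400, 6_000, 24_000):
    print(recruits, months_to_parity(recruits))
```

Under these assumptions, 24,000 new women per month reaches parity after about a year, while 6,000 never does: 6,000 * 7.98% ≈ 479 retained new women per month is still below the 4,800 * 10.5% = 504 retained new men.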

Discussion

These numbers are meant to put into perspective the different levers at Wikipedia's disposal to address the basic numerical problem of the gender gap. The results are striking in showing how difficult it will be to climb out of gender disparity, and how radical the changes would have to be to see it happen within the next decade.

That said, there are several things I leave out. The first is a pair of parameters missing from the model. One is re-recruiting retired users: the number of former editors who return to editing in a given month. Given the historical gender disparity, we can assume that pulling from this pool poses the same problem as recruiting from any largely male-dominated pool. The other is the ratio of active editors to all editors. I mentioned that 125,000 people edited English Wikipedia last month, but only 33,000 could be considered active. If the rate at which female drive-by editors become active editors could be increased, this may accomplish what the “More New Female Editors” strategy suggests.

The second and more problematic issue is spillover. More likely than not, you cannot increase the retention rate of women without also increasing the retention rate of men: whatever encourages editors to keep editing will likely encourage male and female editors alike. Similarly, advertising in female-dominated venues will likely increase the number of male editors recruited as well. The simulations are therefore unrealistic, best-case scenarios for any strategy targeting only women.
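
To illustrate spillover, here is a hypothetical comparison (an illustrative assumption, not one of the charted scenarios): an intervention that doubles new-editor retention for women only, versus one whose effect reaches men as well. Everything else uses the baseline parameters, and the function name is hypothetical.

```python
def share_after_ten_years(male_new_retention: float,
                          female_new_retention: float,
                          months: int = 120) -> float:
    """Female share of active editors after `months` under the baseline model."""
    male, female = 28_000.0, 7_000.0
    for _ in range(months):
        male = male * 0.979 + 4_800 * male_new_retention
        female = female * 0.979 + 1_200 * female_new_retention
    return female / (male + female)


women_only = share_after_ten_years(0.105, 0.16)      # doubling reaches women only
with_spillover = share_after_ten_years(0.21, 0.16)   # the same doubling reaches men too
print(f"women only: {women_only:.1%}, with spillover: {with_spillover:.1%}")
```

When the same doubling also reaches men, the female share after ten years ends up roughly where the baseline leaves it, rather than rising to about 27%.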

Policy Implications

I want to interpret these results as policy priorities. The first is the scale of the solution needed to make any serious dent in the gender gap. Any real attempt to close it has to involve radical action. I call it radical because, as the simulations show, either the retention rate or the new editor recruitment rate would have to be higher than it has ever been in the history of Wikipedia, every month for the next ten years, just to close the gap within the decade.

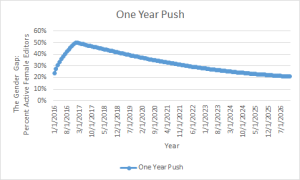

One positive result, indicated by the grey line in the third chart (24,000 new female editors a month), is that the gender gap can be closed within a year if a large, concerted effort is made to recruit new female editors. My first recommendation, then, is a large-scale effort to recruit 24,000 female editors per month. The fourth figure plots this simulation: a short burst of large-scale recruitment could end the gender gap in a year. If we stop the recruitment once Wikipedia's active editors reach 50% women, and assume retention rates and the gender disparity among new editors return to what they are now (though I believe they would change), it would take ten years for the gender gap to return to its current level.
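
The burst-then-stop scenario can be sketched as follows, assuming recruitment stops as soon as female editors reach the male count and that all parameters then revert to the baseline. The function name and the safety cap on the burst length are hypothetical.

```python
def burst_then_revert(burst_new_female: float = 24_000, years_after: int = 10,
                      max_burst_months: int = 600) -> tuple[int, float]:
    """Recruit heavily until parity, then return to baseline recruitment.

    Returns the length of the burst in months and the female share of
    active editors `years_after` years after recruitment stops.
    """
    male, female = 28_000.0, 7_000.0
    burst_months = 0
    # Phase 1: heavy recruitment of new female editors until parity.
    while female < male and burst_months < max_burst_months:
        male = male * 0.979 + 4_800 * 0.105
        female = female * 0.979 + burst_new_female * 0.0798
        burst_months += 1
    # Phase 2: recruitment and retention return to baseline levels.
    for _ in range(12 * years_after):
        male = male * 0.979 + 4_800 * 0.105
        female = female * 0.979 + 1_200 * 0.0798
    return burst_months, female / (male + female)


months, share = burst_then_revert()
print(f"burst lasts {months} months; {share:.1%} female ten years later")
```

With the default 24,000 new women per month, the burst lasts about a year, and ten years after it ends the female share is back near 20%.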

Finally, the simulations above do not combine strategies, for example by both increasing the number of new female editors and increasing new editor retention. This is a viable alternative, and its success can be inferred from the analysis: increasing new female editors to gender parity (4,800 per month) and quadrupling new female editor retention (to 32%) would close the gender gap in about three years. Underlying this whole discussion is the question of whether it is easier to increase retention or to increase new monthly editors. As my recommendation above makes clear, I believe increasing new editors is much easier than retaining existing ones.

The key to optimizing a strategy lies in understanding what is easier or more feasible. Can Wikipedia quadruple the number of new female editors and quadruple new female editor retention every month for three years? Or can it increase new female editors twenty-fold, to 24,000 per month, for one year? Or can it increase new female editor retention about six-fold, to 50%, every month for the next five years? The trade-off is the cost of sustaining increases in retention versus new editor recruitment every month.
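
The multipliers in these questions follow directly from the baseline of 1,200 new female editors per month and 7.98% new female retention:

```python
new_female_baseline = 1_200          # new female editors per month
female_retention_baseline = 0.0798   # new female editor retention

multipliers = {
    "4,800 new women per month": 4_800 / new_female_baseline,
    "24,000 new women per month": 24_000 / new_female_baseline,
    "32% new-female retention": 0.32 / female_retention_baseline,
    "50% new-female retention": 0.50 / female_retention_baseline,
}
for scenario, factor in multipliers.items():
    print(f"{scenario}: {factor:.1f}x the current level")
```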

The ultimate question is: what will it cost (in terms of money, time, and especially goodwill) to increase these rates, and how long can Wikipedia sustain that cost?

Future Directions

Based on a range of feedback from several early readers of this post, I’ve decided to mention what the next steps in this estimation should be. The goal of these next steps is to understand the ecology of editors and the current, historical, and global rates at which editors of different genders transition to different types of editing.

First, one correspondent suggested that only a few hundred editors are needed to change the culture of English Wikipedia, because its core project organizers are so densely connected and socially important. To understand this numerically, I believe we should try to identify these users statistically and trace their trajectories into the core. The questions are where core users come from and how often they retire. This is part of the larger question of how and when users transition from new to active to power users to retirement, and so on.

Picking up on this, another correspondent suggested that retention rates have changed enough that my numbers are much further off than I assume here. If the gender imbalance among new editors, retention rates, and retirement rates have all shifted in the direction of widening the gender gap, my estimates would be very far from current reality. A historical analysis that updates the editor retention study is thus strongly recommended.

Finally, the first commenter notes that all of this applies to English Wikipedia and this is a very astute observation. I focus on the English Wikipedia because the research on gender and retention rates has already been done. Thus, I can run numbers rather than first having to find them. However, the analysis I suggest of repeating the editor retention study and understanding the ecology of users should be replicated for each major language Wikipedia to not only understand the ecologies of users and gender gaps in those communities, but also as a starting point for a more global understanding of the different kinds of gender dynamics across Wikis.